A few months back I was playing around with a hobby project that required me to transcribe speech to text and pass the result on to Azure OpenAI for analysis. Since I work with Azure daily, the Azure AI Speech service seemed like a good tool for the job. In this blog post I'll provide some examples and observations on how to set up and use the service from a Go application.

Note that when I was building this, I did not yet have access to the OpenAI Whisper model in Azure. Since then I've gotten some experience with it too, and I recommend looking into it as an option as well. For this specific use case it might even be the better choice: I feel it does a slightly better job of detecting Finnish correctly and, as you'll see later in this post, the setup on the Go side would likely be simpler. Microsoft has provided a decent recommendation checklist on which model to choose for your use case here.

Setting up the Azure AI Speech service with Bicep

The Azure AI Speech service is based on the Microsoft.CognitiveServices/accounts resource and just requires a tiny bit of configuration to get working. If you're interested in the full examples, see the files here.

resource account 'Microsoft.CognitiveServices/accounts@2023-05-01' = {
  name: speechServiceName
  location: location
  kind: 'SpeechServices'
  sku: {
    name: 'S0'
  }
  properties: {}
}

I'm also using an Azure Key Vault to store some of the secrets my application needs. If you want to skip this part, you can instead copy the values from the created resource in the portal. I like to create the secrets in the same Bicep module as the resource.

resource keyVault 'Microsoft.KeyVault/vaults@2022-07-01' existing = {
  name: keyVaultName
}

resource speechKey 'Microsoft.KeyVault/vaults/secrets@2023-02-01' = {
  parent: keyVault
  name: 'speechKey'
  properties: {
    value: account.listKeys().key1
  }
}

resource speechRegion 'Microsoft.KeyVault/vaults/secrets@2023-02-01' = {
  parent: keyVault
  name: 'speechRegion'
  properties: {
    value: account.location
  }
}

resource speechEndpoint 'Microsoft.KeyVault/vaults/secrets@2023-02-01' = {
  parent: keyVault
  name: 'speechEndpoint'
  properties: {
    value: account.properties.endpoint
  }
}

Then the only thing left to do is to deploy the resources. I prefer the Az modules in PowerShell.

# Just an example
New-AzResourceGroupDeployment -ResourceGroupName "myrg" -TemplateFile ./deployment/bicep/main.bicep

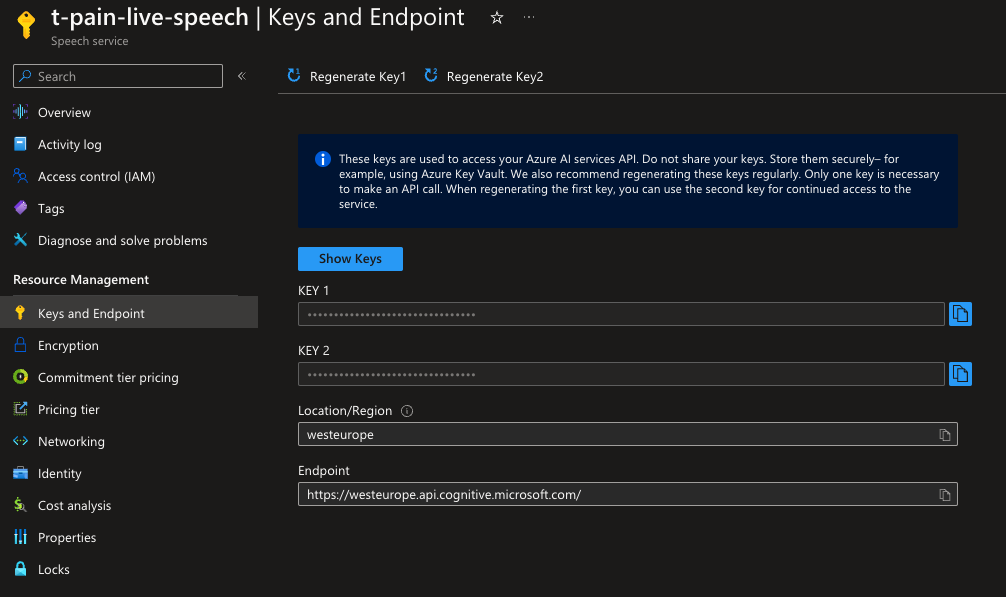

You can get the endpoint and keys from the portal later as well:

Installing the Speech SDK for Go

This is where things get a bit iffy: the SDK depends on a C++ library, so CGO is required to build and run it. This was my first time using CGO, and the setup definitely took some tinkering to get working. On top of that, the official documentation gives no guidance on installing on a Mac. Some of the instructions can, however, be found here.

Download the Speech SDK

export SPEECHSDK_ROOT="/your/path" # for me this was /Users/pasi/speechsdk
mkdir -p "$SPEECHSDK_ROOT"
wget -O MicrosoftCognitiveServicesSpeech.xcframework.zip https://aka.ms/csspeech/macosbinary
unzip MicrosoftCognitiveServicesSpeech.xcframework.zip -d "$SPEECHSDK_ROOT"

Set Environment Variables required by CGO

# I added these to my Go project's build configuration
CGO_CFLAGS=-I/Users/pasi/speechsdk/MicrosoftCognitiveServicesSpeech.xcframework/macos-arm64_x86_64/MicrosoftCognitiveServicesSpeech.framework/Headers
CGO_LDFLAGS=-Wl,-rpath,/Users/pasi/speechsdk/MicrosoftCognitiveServicesSpeech.xcframework/macos-arm64_x86_64 -F/Users/pasi/speechsdk/MicrosoftCognitiveServicesSpeech.xcframework/macos-arm64_x86_64 -framework MicrosoftCognitiveServicesSpeech

If you're on Debian/Ubuntu, you will also need to install OpenSSL 1.x:

wget -O - https://www.openssl.org/source/openssl-1.1.1u.tar.gz | tar zxf -
cd openssl-1.1.1u
./config --prefix=/usr/local
make -j $(nproc)
sudo make install_sw install_ssldirs
sudo ldconfig -v
export SSL_CERT_DIR=/etc/ssl/certs
# As well as set LD_LIBRARY_PATH so the freshly built libraries are found
export LD_LIBRARY_PATH=/usr/local/lib:$LD_LIBRARY_PATH

You can check my installation steps for the container my app runs on from the Dockerfile here.

Lastly we still need to install the Go packages:

go get -u github.com/Microsoft/cognitive-services-speech-sdk-go

Using the speech service in your code for speech-to-text

I found the quickstart of using the service somewhat difficult to parse. Thankfully the examples had a ready-made wrapper I could augment.

You can find all my code for the package here.

You can also find the Go package documentation here.

My use case was to take in a URL from a Telegram bot and convert the audio behind it to text. Let's walk through some of the code.

To make testing a bit easier, I defined an interface for the wrapper. You might not need it.

type Writer interface {
	Write(p []byte) (err error)
}

type Wrapper interface {
	StartContinuous(handler func(event *SDKWrapperEvent)) error
	StopContinuous() error
	Writer
}
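To illustrate why the small Writer interface helps with testing, here is a minimal sketch of a fake that records everything written to it, so chunking logic can be checked without the Speech SDK or CGO. The names fakeWriter and writeChunks are hypothetical and exist only for this example; they are not part of my package or the SDK.

```go
package main

import (
	"bytes"
	"fmt"
)

// Writer mirrors the interface shown above.
type Writer interface {
	Write(p []byte) (err error)
}

// fakeWriter is a hypothetical test double that keeps written bytes in memory
// and counts how many times Write was called.
type fakeWriter struct {
	buf    bytes.Buffer
	writes int
}

func (f *fakeWriter) Write(p []byte) error {
	f.writes++
	_, err := f.buf.Write(p)
	return err
}

// writeChunks is a hypothetical helper that splits data into chunks of at most
// size bytes and writes each chunk, stopping at the first write error.
func writeChunks(w Writer, data []byte, size int) error {
	if size <= 0 {
		return fmt.Errorf("chunk size must be positive, got %d", size)
	}
	for len(data) > 0 {
		n := size
		if len(data) < n {
			n = len(data)
		}
		if err := w.Write(data[:n]); err != nil {
			return err
		}
		data = data[n:]
	}
	return nil
}

func main() {
	fake := &fakeWriter{}
	if err := writeChunks(fake, []byte("hello speech"), 5); err != nil {
		fmt.Println("write failed:", err)
		return
	}
	fmt.Println(fake.writes, fake.buf.String())
}
```

Because the fake satisfies the same interface as the real wrapper, any function that only needs a Writer can be tested this way.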

In the NewWrapper constructor, I'm also restricting automatic language detection to Finnish. I first tested this with multiple candidate languages, but Finnish was sometimes mistaken for one of the others, which caused problems. I ended up commenting the others out, as my users speak Finnish.

autodetect, err := speech.NewAutoDetectSourceLanguageConfigFromLanguages([]string{
	// TODO: Add to config instead
	//"en-US",
	//"en-GB",
	"fi-FI",
})
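The TODO above could be addressed in several ways. As one possibility, here is a minimal sketch that reads the candidate languages from an environment variable and falls back to Finnish. The variable name SPEECH_LANGUAGES and both function names are assumptions made for this example, and the check for a hyphen is only a light sanity check, not real locale validation.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// parseLanguages splits a comma-separated list such as "fi-FI, en-US" into
// locale codes, trimming whitespace and skipping empty entries.
func parseLanguages(raw string) ([]string, error) {
	var langs []string
	for _, part := range strings.Split(raw, ",") {
		lang := strings.TrimSpace(part)
		if lang == "" {
			continue
		}
		if !strings.Contains(lang, "-") {
			return nil, fmt.Errorf("%q does not look like a locale such as fi-FI", lang)
		}
		langs = append(langs, lang)
	}
	return langs, nil
}

// languagesFromEnv reads the hypothetical SPEECH_LANGUAGES variable and
// falls back to Finnish when it is unset or empty.
func languagesFromEnv() ([]string, error) {
	langs, err := parseLanguages(os.Getenv("SPEECH_LANGUAGES"))
	if err != nil {
		return nil, err
	}
	if len(langs) == 0 {
		return []string{"fi-FI"}, nil
	}
	return langs, nil
}

func main() {
	langs, err := languagesFromEnv()
	if err != nil {
		fmt.Println("invalid SPEECH_LANGUAGES:", err)
		os.Exit(1)
	}
	fmt.Println(langs)
}
```

The resulting slice could then be passed to speech.NewAutoDetectSourceLanguageConfigFromLanguages in place of the hard-coded list.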

To create a new wrapper we need the key and the region of the Speech service, and then we can call the function that processes the file found at a URL:

recognizer, err := speechtotext.NewWrapper("mykey", "westeurope")
text, err := speechtotext.HandleAudioLink("someURL", recognizer)

The rest of the logic lives in the HandleAudioLink function.

First I download the file to disk and defer its deletion as cleanup:

wavFile, err := handleAudioFileSetup(url)
if err != nil {
	return "", err
}
defer deleteFromDisk(wavFile)
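The body of handleAudioFileSetup is not shown in this post, so the following is only a rough sketch of what the download part of such a helper might look like using the standard library. The name downloadToTemp is hypothetical, and the sketch does not convert audio formats, which a real setup step may well need to do before the file is fed to the recognizer.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"os"
)

// downloadToTemp is a hypothetical helper that fetches url and stores the
// response body in a temporary file, returning the file's path.
// The caller is responsible for deleting the file.
func downloadToTemp(url string) (string, error) {
	resp, err := http.Get(url)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()

	if resp.StatusCode != http.StatusOK {
		return "", fmt.Errorf("unexpected status: %s", resp.Status)
	}

	f, err := os.CreateTemp("", "audio-*.wav")
	if err != nil {
		return "", err
	}
	if _, err := io.Copy(f, resp.Body); err != nil {
		f.Close()
		os.Remove(f.Name())
		return "", err
	}
	if err := f.Close(); err != nil {
		os.Remove(f.Name())
		return "", err
	}
	return f.Name(), nil
}

func main() {
	if len(os.Args) < 2 {
		fmt.Println("usage: download <url>")
		return
	}
	path, err := downloadToTemp(os.Args[1])
	if err != nil {
		fmt.Println("download failed:", err)
		return
	}
	defer os.Remove(path) // same idea as the deferred cleanup above
	fmt.Println("saved to", path)
}
```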

Then I set up a goroutine that pumps the contents of the file into a stream the Speech SDK reads from.

stop := make(chan int)
ready := make(chan struct{})
go PumpFileToStream(stop, wavFile, wrapper)

// The actual implementation in the wrapper
func PumpFileToStream(stop chan int, filename string, writer Writer) {
	file, err := os.Open(filename)
	if err != nil {
		fmt.Println("Error opening file: ", err)
		return
	}
	defer file.Close()
	reader := bufio.NewReader(file)
	buffer := make([]byte, 3200)
	for {
		select {
		case <-stop:
			fmt.Println("Stopping pump...")
			return
		case <-time.After(1 * time.Millisecond):
		}
		n, err := reader.Read(buffer)
		if err == io.EOF {
			err = writer.Write(buffer[0:n])
			if err != nil {
				fmt.Println("Error writing last data chunk to the stream")
			}
			return
		}
		if err != nil {
			fmt.Println("Error reading file: ", err)
			break
		}
		err = writer.Write(buffer[0:n])
		if err != nil {
			fmt.Println("Error writing to the stream")
		}
	}
}

Next we need to tell the SDK what should happen when it produces events while reading the stream. In my case I mostly want to collect the recognized results into a slice of strings. There is also still a known bug in this code (see the TODO), so your mileage may vary.

var resultText []string
log.Println("Starting continuous recognition")
err = wrapper.StartContinuous(func(event *SDKWrapperEvent) {
	defer event.Close()
	switch event.EventType {
	case Recognized:
		log.Println("Got a recognized event")
		resultText = append(resultText, event.Recognized.Result.Text)
	case Recognizing:
	case Cancellation:
		log.Println("Got a cancellation event. Reason: ", event.Cancellation.Reason)
		close(ready)
		if event.Cancellation.Reason.String() == "Error" {
			log.Println("ErrorCode:" + event.Cancellation.ErrorCode.String() + " ErrorDetails: " + event.Cancellation.ErrorDetails)
		}
		// TODO: If we receive an error here, the writing to the stream should be stopped. Currently that does not seem to happen.
	}
})

// All StartContinuous basically does is forward the SDK events to your callback
func (wrapper *SDKWrapper) StartContinuous(callback func(*SDKWrapperEvent)) error {
	if atomic.SwapInt32(&wrapper.started, 1) == 1 {
		return nil
	}
	wrapper.recognizer.Recognized(func(event speech.SpeechRecognitionEventArgs) {
		wrapperEvent := new(SDKWrapperEvent)
		wrapperEvent.EventType = Recognized
		wrapperEvent.Recognized = &event
		callback(wrapperEvent)
	})
	wrapper.recognizer.Recognizing(func(event speech.SpeechRecognitionEventArgs) {
		wrapperEvent := new(SDKWrapperEvent)
		wrapperEvent.EventType = Recognizing
		wrapperEvent.Recognizing = &event
		callback(wrapperEvent)
	})
	wrapper.recognizer.Canceled(func(event speech.SpeechRecognitionCanceledEventArgs) {
		wrapperEvent := new(SDKWrapperEvent)
		wrapperEvent.EventType = Cancellation
		wrapperEvent.Cancellation = &event
		callback(wrapperEvent)
	})
	return <-wrapper.recognizer.StartContinuousRecognitionAsync()
}
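I have not fixed the TODO in the handler above, but one way to approach it might be to signal the pump from the cancellation branch. A plain close on a channel panics if it runs twice, so this sketch wraps the channel in a sync.Once. Everything here is hypothetical: the signal type, the onCancellation function, and the use of a plain string for the reason are stand-ins for illustration, and the pump would need to select on Done() instead of its current stop channel.

```go
package main

import (
	"fmt"
	"sync"
)

// signal is a hypothetical helper: a one-shot notification that is safe to
// fire from several places, because the channel is closed at most once.
type signal struct {
	once sync.Once
	ch   chan struct{}
}

func newSignal() *signal {
	return &signal{ch: make(chan struct{})}
}

// Fire closes the channel on the first call; later calls do nothing.
func (s *signal) Fire() {
	s.once.Do(func() { close(s.ch) })
}

// Done returns a channel that is closed once Fire has been called.
func (s *signal) Done() <-chan struct{} {
	return s.ch
}

// Fired reports whether Fire has been called, without blocking.
func (s *signal) Fired() bool {
	select {
	case <-s.ch:
		return true
	default:
		return false
	}
}

// onCancellation sketches the Cancellation branch: recognition is always
// marked as finished, and on errors the pump is told to stop as well.
func onCancellation(reason string, ready, stop *signal) string {
	ready.Fire()
	if reason == "Error" {
		stop.Fire()
		return "cancelled with an error, pump asked to stop"
	}
	return fmt.Sprintf("cancelled: %s", reason)
}

func main() {
	ready, stop := newSignal(), newSignal()
	fmt.Println(onCancellation("EndOfStream", ready, stop))
	fmt.Println(onCancellation("Error", ready, stop)) // second Fire on ready is a no-op
	<-stop.Done()
	fmt.Println("pump would stop here")
}
```

The same guard would also protect the ready channel if the SDK ever delivered more than one cancellation event.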

Lastly, we check for errors and stop the continuous recognition once we have either read the whole file or hit an arbitrary timeout. I set the timeout to two minutes, but you should adjust it for your use case.

if err != nil {
	return "", err
}

select {
case <-ready:
	err := wrapper.StopContinuous()
	if err != nil {
		log.Println("Error stopping continuous: ", err)
	}
case <-time.After(120 * time.Second):
	close(stop)
	_ = wrapper.StopContinuous()
	return "", fmt.Errorf("timeout")
}

defer wrapper.StopContinuous()

if len(resultText) == 0 {
	return "", fmt.Errorf("only got empty results")
}

return strings.Join(resultText, " "), nil
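The wait-or-time-out step above is a general Go pattern, so here it is isolated as a small runnable sketch. The names waitDone and errTimeout are hypothetical and not part of my package. The sketch uses a time.NewTimer that is stopped on return, which is one common alternative to time.After.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// errTimeout is a hypothetical sentinel error callers can test for with errors.Is.
var errTimeout = errors.New("timed out waiting for recognition to finish")

// waitDone blocks until done is closed or the timeout elapses,
// whichever happens first.
func waitDone(done <-chan struct{}, timeout time.Duration) error {
	timer := time.NewTimer(timeout)
	defer timer.Stop()

	select {
	case <-done:
		return nil
	case <-timer.C:
		return fmt.Errorf("%w after %s", errTimeout, timeout)
	}
}

func main() {
	done := make(chan struct{})
	go func() {
		time.Sleep(10 * time.Millisecond) // stand-in for the recognizer finishing
		close(done)
	}()

	if err := waitDone(done, time.Second); err != nil {
		fmt.Println("unexpected:", err)
		return
	}
	fmt.Println("finished in time")

	err := waitDone(make(chan struct{}), 20*time.Millisecond)
	fmt.Println("timed out:", errors.Is(err, errTimeout))
}
```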

You can also use the SDK for single-shot (non-continuous) recognition, but I did not look further into that.

And that's about it for my use case. The newer Whisper model from the Azure OpenAI service might have been a better fit, but the AI Speech service is a good option as well.